Try with ReLU activation for hidden layers

# Import Keras & TensorFlow

import tensorflow as tf

import keras

# Load image data

fashion_mnist = keras.datasets.fashion_mnist

(x_train, y_train), (x_test, y_test) = fashion_mnist.load_data()

class_names = ["T-shirt/top", "Trouser", "Pullover", "Dress", "Coat", "Sandal", "Shirt", "Sneaker", "Bag", "Ankle boot"]

# Data preparation:

# Map pixel intensities from [0, 255] to [0.0, 1.0]

x_train = x_train / 255.0

x_test = x_test / 255.0

model = keras.models.Sequential()

model.add(keras.layers.Flatten(input_shape=[28, 28]))

model.add(keras.layers.Dense(300, activation="relu"))

model.add(keras.layers.Dense(100, activation="relu"))

model.add(keras.layers.Dense(10, activation="softmax"))

model.compile(loss = "sparse_categorical_crossentropy", optimizer="sgd", metrics = ["accuracy"])

ReLU_history = model.fit(x_train, y_train, epochs = 30, validation_split=0.1)

model.evaluate(x_test, y_test)

[0.3353878855705261, 0.8816999793052673]
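The two numbers returned by `model.evaluate` are the test loss followed by the test accuracy. As a rough illustration of what the loss value measures, the sketch below computes softmax and sparse categorical cross-entropy by hand for a single example in plain Python. The helper names `softmax` and `sparse_cross_entropy` are made up for this illustration and are not Keras functions; Keras averages this per-example quantity over all examples.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    shift = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sparse_cross_entropy(probs, label):
    """Negative log-probability assigned to the true class index."""
    return -math.log(probs[label])

# A network that knows nothing spreads probability evenly over the 10 classes.
uniform = softmax([0.0] * 10)
chance_loss = sparse_cross_entropy(uniform, label=3)
print(round(chance_loss, 4))  # ln(10), about 2.3026

# A confident, correct prediction gives a much smaller loss.
confident = softmax([0.0] * 3 + [5.0] + [0.0] * 6)
print(sparse_cross_entropy(confident, label=3) < chance_loss)
```

Against that chance-level baseline of about 2.30 for ten classes, the test loss of roughly 0.34 reported above shows the network puts most of its probability on the correct class for the typical test image.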

Try with tanh activation for hidden layers

model = keras.models.Sequential()

model.add(keras.layers.Flatten(input_shape=[28, 28]))

model.add(keras.layers.Dense(300, activation="tanh"))

model.add(keras.layers.Dense(100, activation="tanh"))

model.add(keras.layers.Dense(10, activation="softmax"))

model.summary()

model.compile(loss = "sparse_categorical_crossentropy", optimizer="sgd", metrics = ["accuracy"])

Tanh_history = model.fit(x_train, y_train, epochs = 30, validation_split=0.1)

model.evaluate(x_test, y_test)

[0.34416577219963074, 0.8784000277519226]

Try with LeakyReLU activation for hidden layers

model = keras.models.Sequential()

model.add(keras.layers.Flatten(input_shape=[28, 28]))

model.add(keras.layers.Dense(300, activation=keras.layers.LeakyReLU()))

model.add(keras.layers.Dense(100, activation=keras.layers.LeakyReLU()))

model.add(keras.layers.Dense(10, activation="softmax"))

model.summary()

model.compile(loss = "sparse_categorical_crossentropy", optimizer="sgd", metrics = ["accuracy"])

LeakyReLU_history = model.fit(x_train, y_train, epochs = 30, validation_split=0.1)

model.evaluate(x_test, y_test)

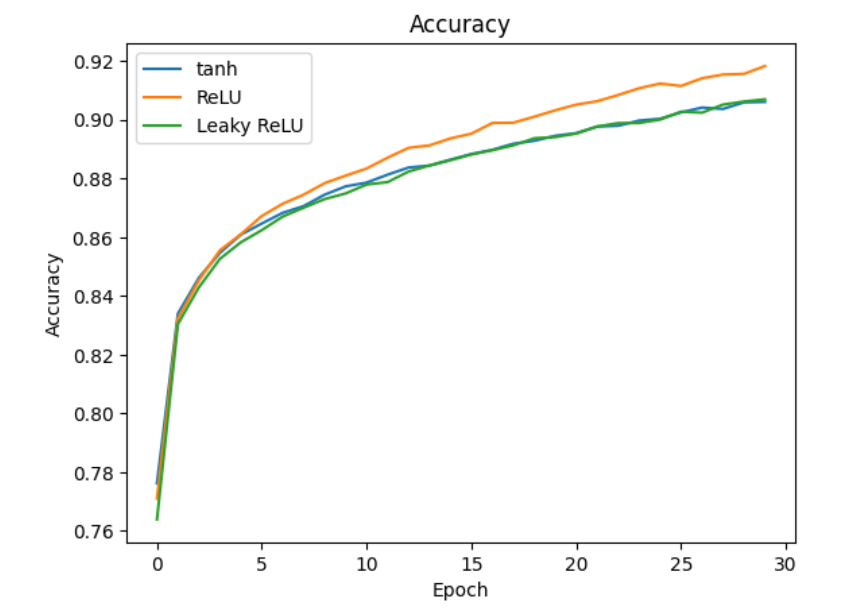

[0.3527831435203552, 0.8751999735832214]

Visualise loss/accuracy during training

import matplotlib.pyplot as plt

plt.plot(Tanh_history.history['accuracy'])

plt.plot(ReLU_history.history['accuracy'])

plt.plot(LeakyReLU_history.history['accuracy'])

plt.title('Accuracy')

plt.ylabel('Accuracy')

plt.xlabel('Epoch')

plt.legend(['tanh', 'ReLU', 'Leaky ReLU'], loc='upper left')

plt.show()

plt.plot(Tanh_history.history['loss'])

plt.plot(ReLU_history.history['loss'])

plt.plot(LeakyReLU_history.history['loss'])

plt.title('Loss')

plt.ylabel('Loss')

plt.xlabel('Epoch')

plt.legend(['tanh', 'ReLU', 'Leaky ReLU'], loc='upper left')

plt.show()

Analysis

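In the loss plot, the training loss for the Leaky ReLU activation falls fastest, which suggests it fits the training data best. In the accuracy plot, the ReLU and Leaky ReLU curves follow very similar trends and are generally above the tanh curve.

- Leaky ReLU may be the best choice for this particular task, since it reaches a lower loss and a higher accuracy on the training curves.

- ReLU also performs well, especially on accuracy, where it is very close to Leaky ReLU.

- tanh performs slightly worse in this example, on both loss and accuracy.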
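To back the reading of the curves with numbers, the final-epoch values can be pulled out of each `History` object. The sketch below is a minimal helper, assuming dictionaries shaped like the `history.history` attribute produced by `model.fit`. Because `validation_split=0.1` was used, Keras also records `val_loss` and `val_accuracy`, which the plots above do not show. The function name `final_metrics` and the numbers in `demo` are hypothetical placeholders, not results from this experiment.

```python
def final_metrics(histories):
    """Return the last-epoch value of every recorded metric, per model.

    `histories` maps a label (e.g. "ReLU") to a dict shaped like
    `History.history`: metric name -> list with one value per epoch.
    """
    return {
        label: {metric: values[-1] for metric, values in hist.items()}
        for label, hist in histories.items()
    }

# Placeholder data only, to show the expected shape of the input.
demo = {
    "model_a": {"loss": [0.9, 0.5], "accuracy": [0.70, 0.82],
                "val_loss": [0.8, 0.6], "val_accuracy": [0.72, 0.80]},
    "model_b": {"loss": [1.0, 0.6], "accuracy": [0.65, 0.79],
                "val_loss": [0.9, 0.7], "val_accuracy": [0.68, 0.77]},
}

summary = final_metrics(demo)
for label, metrics in summary.items():
    print(label, metrics)
```

With the real runs, the call would look like `final_metrics({"tanh": Tanh_history.history, "ReLU": ReLU_history.history, "Leaky ReLU": LeakyReLU_history.history})`. Reading `val_accuracy` next to `accuracy` helps separate an activation that generalises better from one that only fits the training data more closely.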